I’ve been using PopClip and it has been working well for me.

I recently installed Ollama locally to replace ChatGPT. Ollama is a tool that runs LLMs on your own machine and exposes a local HTTP API, which is documented at the link below.

https://github.com/ollama/ollama/blob/main/docs/api.md
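For reference, here is a minimal sketch of a non-streaming call to the `/api/generate` endpoint described in those docs. It assumes Ollama is listening on its default address, `http://localhost:11434`. The names `PostJson`, `buildGenerateRequest`, `extractReply` and `generate` are hypothetical helpers for illustration only; they are not part of PopClip or Ollama. The HTTP transport is passed in as a function so the sketch does not depend on any particular client.

```typescript
// Hypothetical transport type: posts a JSON body and resolves to the parsed reply.
type PostJson = (url: string, body: unknown) => Promise<unknown>;

// Assumed default address of a local Ollama server.
const OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate";

interface GenerateRequest {
  model: string;
  prompt: string;
  stream: boolean;
}

// Build the request body. stream: false asks for one JSON object
// instead of a stream of partial objects.
function buildGenerateRequest(model: string, prompt: string): GenerateRequest {
  return { model, prompt, stream: false };
}

// Pull the generated text out of a parsed reply (its `response` field).
function extractReply(data: unknown): string {
  if (typeof data === "object" && data !== null && "response" in data) {
    const response = (data as { response: unknown }).response;
    if (typeof response === "string") {
      return response.trim();
    }
  }
  throw new Error("Unexpected reply shape from Ollama");
}

// Send one prompt and return the model's text.
async function generate(post: PostJson, model: string, prompt: string): Promise<string> {
  const data = await post(OLLAMA_GENERATE_URL, buildGenerateRequest(model, prompt));
  return extractReply(data);
}
```

With axios, the transport could be something like `(url, body) => axios.post(url, body).then((r) => r.data)`, so a call would look like `generate(post, "mistral", "Hello")`.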

I modified the source of the ChatGPT PopClip extension, changing the API connection part so that it talks to Ollama instead.

// #popclip

// name: Ollama Chat

// icon: ollama.png

// identifier: js.popclip.extension.ollama.chat

// description: Send the selected text to Ollama LOCAL API and append the response.

// app: { name: Ollama, link: 'https://github.com/ollama/ollama/blob/main/docs/api.md' }

// popclipVersion: 4586

// keywords: Ollama chat

// entitlements: [network]

// language: typescript

import axios from "axios";

export const options: Option[] = [

{

identifier: "model",

label: "Model",

type: "multiple",

defaultValue: "mistral",

values: ["mistral", "another-model"],

},

{

identifier: "systemMessage",

label: "System Message",

type: "string",

description:

"Optional system message to specify the behaviour of the AI agent.",

},

{

identifier: "resetMinutes",

label: "Reset Timer (minutes)",

type: "string",

description:

"Reset the conversation if idle for this many minutes. Set blank to disable.",

defaultValue: "15",

},

{

identifier: "showReset",

label: "Show Reset Button",

type: "boolean",

icon: "broom-icon.svg",

description: "Show a button to reset the conversation.",

},

];

type OptionsShape = {

resetMinutes: string;

model: string;

systemMessage: string;

showReset: boolean;

};

// typescript interfaces for Ollama API

interface Message {

role: "user" | "system" | "assistant";

content: string;

}

interface Response {

model: string;

created_at: string;

response: string;

done: boolean;

done_reason: string;

context: Array<number>;

total_duration: number;

load_duration: number;

prompt_eval_count: number;

prompt_eval_duration: number;

eval_count: number;

eval_duration: number;

}

// the extension keeps the message history in memory

const messages: Array<Message> = [];

// the last chat date

let lastChat: Date = new Date();

// reset the history

function reset() {

print("Resetting chat history");

messages.length = 0;

}

// get the content of the last `n` messages from the chat, trimmed and separated by double newlines

function getTranscript(n: number): string {

return messages

.slice(-n)

.map((m) => m.content.trim())

.join("\n\n");

}

// the main chat action

const chat: ActionFunction<OptionsShape> = async (input, options) => {

const ollama = axios.create({

baseURL: "http://localhost:11434/api",

});

// if the last chat was long enough ago, reset the history

if (options.resetMinutes.length > 0) {

const resetInterval = parseInt(options.resetMinutes) * 1000 * 60;

if (new Date().getTime() - lastChat.getTime() > resetInterval) {

reset();

}

}

if (messages.length === 0) {

// add the system message to the start of the conversation

let systemMessage = options.systemMessage.trim();

if (systemMessage) {

messages.push({ role: "system", content: systemMessage });

}

}

// add the new message to the history

messages.push({ role: "user", content: input.text.trim() });

// send the whole message history to Ollama

try {

const { data }: { data: Response } = await ollama.post("/generate", {

model: options.model || "mistral",

prompt: getTranscript(messages.length),

// no "format" field here: format: "json" would force the model to answer in JSON

stream: false,

});

// with stream: false, the whole reply arrives in a single object

const combinedResponse = data.response;

// add the response to the history

messages.push({ role: "assistant", content: combinedResponse });

lastChat = new Date();

// if holding shift and alt, paste just the response.

// if holding shift, copy just the response.

// else, paste the last input and response.

if (popclip.modifiers.shift && popclip.modifiers.option) {

popclip.pasteText(getTranscript(1));

} else if (popclip.modifiers.shift) {

popclip.copyText(getTranscript(1));

} else {

popclip.pasteText(getTranscript(2));

popclip.showSuccess();

}

} catch (e) {

popclip.showText(getErrorInfo(e));

}

};

export function getErrorInfo(error: unknown): string {

if (typeof error === "object" && error !== null && "response" in error) {

const response = (error as any).response;

return `Message from Ollama (code ${response.status}): ${response.data.error}`;

} else {

return String(error);

}

}

// export the actions

export const actions: Action<OptionsShape>[] = [

{

title: "Chat",

code: chat,

},

{

title: "Reset Chat",

icon: "broom-icon.svg",

stayVisible: true,

requirements: ["option-showReset=1"],

code: reset,

},

];

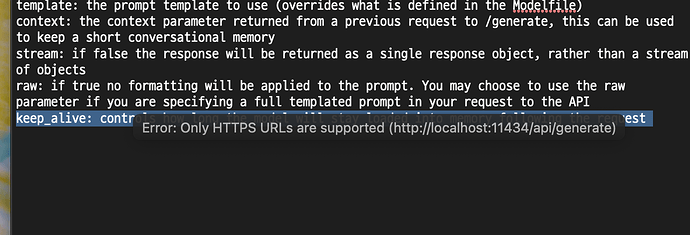

When I run it, the following error occurs.

Only HTTPS connections are allowed, so the HTTP connection to the local Ollama server fails. Is there a workaround?